Gradient texture support

Published Sunday, July 20, 2008 by Glow.

Just wanted to post a small video of a scene that uses a new feature I've added: gradient textures. You can have variable gradients with multiple colors, just like in Photoshop, but only linear gradients are supported (no angular, diamond, etc.).
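A multi-stop linear gradient like this can be sketched as follows. This is a minimal Python illustration, not the engine's actual code. The stop format (a sorted list of `(position, (r, g, b))` pairs) and the names `sample_gradient` and `linear_gradient_texture` are hypothetical. Each pixel is projected onto the gradient axis to get a parameter `t`, and the color is linearly interpolated between the two stops surrounding `t`.

```python
from bisect import bisect_right


def sample_gradient(stops, t):
    """Return the RGB color of a multi-stop gradient at parameter t.

    `stops` is a hypothetical format: a list of (position, (r, g, b)) pairs,
    sorted by position, with positions in [0, 1].
    """
    t = min(max(t, 0.0), 1.0)
    positions = [p for p, _ in stops]
    i = bisect_right(positions, t)  # index of the first stop after t
    if i == 0:
        return stops[0][1]
    if i == len(stops):
        return stops[-1][1]
    (p0, c0), (p1, c1) = stops[i - 1], stops[i]
    f = (t - p0) / (p1 - p0) if p1 > p0 else 0.0
    # Linear interpolation between the two surrounding stops.
    return tuple(a + (b - a) * f for a, b in zip(c0, c1))


def linear_gradient_texture(stops, width, height, start, end):
    """Fill a width x height texture (rows of RGB tuples) with a linear
    gradient running from pixel `start` to pixel `end`."""
    dx, dy = end[0] - start[0], end[1] - start[1]
    length_sq = (dx * dx + dy * dy) or 1.0  # avoid division by zero
    texture = []
    for y in range(height):
        row = []
        for x in range(width):
            # Project the pixel onto the gradient axis: t = 0 at start, 1 at end.
            t = ((x - start[0]) * dx + (y - start[1]) * dy) / length_sq
            row.append(sample_gradient(stops, t))
        texture.append(row)
    return texture
```

Angular or diamond gradients would only change how `t` is computed per pixel; the stop lookup stays the same.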

And here's a screenshot of all the tools used for this effect in action (including the new gradient editor):

Oh, and I'm currently working on dynamic (physics-like) cables. Those will probably be the key dynamic elements in our Evoke demo, and they'll probably also be the last core feature I work on before starting on the demo itself.
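The post doesn't describe how the cables are simulated. One common way to get physics-like cables is Verlet integration of a chain of point masses with distance constraints. The minimal 2D Python sketch below assumes that technique; `simulate_cable` and its parameters are hypothetical, and a real engine would add damping, collisions and a third dimension.

```python
import math


def simulate_cable(anchor, num_points, segment_length, steps,
                   dt=1.0 / 60.0, gravity=(0.0, -9.81), iterations=10):
    """Simulate a 2D cable pinned at `anchor` with Verlet integration.

    The cable starts horizontal; returns the final point positions.
    """
    pos = [(anchor[0] + i * segment_length, anchor[1]) for i in range(num_points)]
    prev = list(pos)  # previous positions implicitly store the velocity
    for _ in range(steps):
        # 1) Verlet step for every free point: x' = x + (x - x_prev) + g*dt^2.
        for i in range(1, num_points):
            (x, y), (px, py) = pos[i], prev[i]
            prev[i] = (x, y)
            pos[i] = (x + (x - px) + gravity[0] * dt * dt,
                      y + (y - py) + gravity[1] * dt * dt)
        # 2) Relax the distance constraints a few times (Gauss-Seidel style).
        for _ in range(iterations):
            pos[0] = anchor  # keep the first point pinned
            for i in range(num_points - 1):
                (x0, y0), (x1, y1) = pos[i], pos[i + 1]
                dx, dy = x1 - x0, y1 - y0
                dist = math.hypot(dx, dy) or 1e-9
                diff = (dist - segment_length) / dist
                if i == 0:
                    # The pinned point doesn't move; correct only its neighbour.
                    pos[1] = (x1 - dx * diff, y1 - dy * diff)
                else:
                    # Split the correction evenly between both points.
                    pos[i] = (x0 + 0.5 * dx * diff, y0 + 0.5 * dy * diff)
                    pos[i + 1] = (x1 - 0.5 * dx * diff, y1 - 0.5 * dy * diff)
    return pos
```

More constraint iterations make the cable less stretchy at the cost of more work per frame.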

Mesh subdivision added

Published Saturday, July 19, 2008 by Glow.

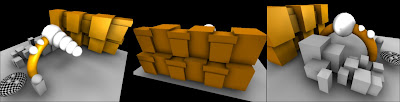

Okay, I've finally implemented mesh subdivision. To be precise, I've implemented Loop's subdivision algorithm. It took a bit longer than expected, since I was trying to implement the wrong algorithm ;)

Anyway, it works great, and it hides almost all of the geometry artifacts caused by the lossy compression of the vertices. The added screenshots show the same polygonal model as in my previous post, but this time with 1 iteration of the subdivision algorithm applied (quadrupling the polycount).
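For reference, one step of Loop subdivision on a closed triangle mesh can be sketched as below. This is a minimal Python illustration of the standard algorithm, not the engine's code; boundary edges and creases are deliberately not handled, and the function name is hypothetical. Each triangle is split into four, which is where the quadrupled polycount comes from. New edge vertices use weights 3/8, 3/8, 1/8, 1/8, and original vertices are smoothed with Loop's beta weight.

```python
import math


def loop_subdivide(vertices, faces):
    """One step of Loop subdivision on a closed (watertight) triangle mesh.

    vertices: list of (x, y, z) tuples; faces: list of (i, j, k) index triples.
    Returns (new_vertices, new_faces); every triangle becomes four.
    Boundaries and creases are not handled in this sketch.
    """
    edge_opposite = {}  # (u, v) with u < v -> vertices opposite that edge
    neighbours = [set() for _ in range(len(vertices))]
    for a, b, c in faces:
        for u, v, w in ((a, b, c), (b, c, a), (c, a, b)):
            edge_opposite.setdefault((min(u, v), max(u, v)), []).append(w)
            neighbours[u].add(v)
            neighbours[v].add(u)

    def weighted_sum(terms):
        """Sum of weight * point over (weight, point) pairs."""
        return tuple(sum(w * p[k] for w, p in terms) for k in range(3))

    # Even (original) vertices: (1 - n*beta) * v + beta * sum of the n neighbours,
    # with Loop's beta = (5/8 - (3/8 + cos(2*pi/n) / 4)^2) / n.
    new_vertices = []
    for i, v in enumerate(vertices):
        n = len(neighbours[i])
        beta = (5 / 8 - (3 / 8 + math.cos(2 * math.pi / n) / 4) ** 2) / n
        terms = [(1 - n * beta, v)] + [(beta, vertices[j]) for j in neighbours[i]]
        new_vertices.append(weighted_sum(terms))

    # Odd (edge) vertices: 3/8 of each endpoint plus 1/8 of each opposite vertex.
    edge_index = {}
    for (u, v), opposite in edge_opposite.items():
        if len(opposite) != 2:
            raise ValueError("mesh must be closed: every edge needs two faces")
        edge_index[(u, v)] = len(new_vertices)
        new_vertices.append(weighted_sum([(3 / 8, vertices[u]), (3 / 8, vertices[v]),
                                          (1 / 8, vertices[opposite[0]]),
                                          (1 / 8, vertices[opposite[1]])]))

    def mid(u, v):
        return edge_index[(min(u, v), max(u, v))]

    # Split each triangle into three corner triangles and one centre triangle,
    # keeping the original winding order.
    new_faces = []
    for a, b, c in faces:
        ab, bc, ca = mid(a, b), mid(b, c), mid(c, a)
        new_faces += [(a, ab, ca), (b, bc, ab), (c, ca, bc), (ab, bc, ca)]
    return new_vertices, new_faces
```

Applied to a tetrahedron (4 vertices, 6 edges, 4 faces), one step yields 10 vertices and 16 faces.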

Polygonal meshes

Published Monday, July 14, 2008 by Glow.

Here are some shots of a new feature I've been working on (and still am): polygonal meshes. I wasn't supporting polygonal objects before, since they can eat up a lot of precious bytes, but Izard really needed them for his plans for our new intro. Luckily I didn't have to invent everything myself, since I found two really great sources of info on how to pack/compress polygonal objects as efficiently as possible: Iq's discussion of his technology from the 195/95/256 64k demo, and ryg's presentation on how to write compressor-friendly code.

Anyway, I'm currently using several tricks to minimize the required space: polygon strips, storing only vertex positions and indices (regenerating normals and automapping UVs at load time), quantizing vertex positions to 8 bits per component, delta encoding all values, and reordering the streams per component. And it seems to help a lot: I've been able to store a 19800-triangle/9902-vertex sphere in 74000 bytes, which compressed to 4520 bytes. That's good enough, since my objects will probably have 1k triangles max. (The achieved compression ratio does vary per object, though, since it depends on the topology and geometry used.)
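Three of these tricks (8-bit quantization, per-component stream reordering and delta encoding) can be sketched in Python as follows. This is an illustration under assumptions, not the actual packer: quantization is relative to a given bounding box, deltas wrap modulo 256 so each stays one byte, and `zlib` only stands in for whatever compressor the final executable is packed with. Polygon strips and index encoding are left out, and the function names are hypothetical.

```python
import zlib


def encode_positions(positions, bbox_min, bbox_max):
    """Quantize positions to 8 bits per component, store each axis as its own
    stream (all x, then all y, then all z) and delta-encode each stream."""
    streams = []
    for axis in range(3):
        lo, hi = bbox_min[axis], bbox_max[axis]
        scale = 255.0 / (hi - lo) if hi > lo else 0.0
        quantized = [round((p[axis] - lo) * scale) for p in positions]
        previous = 0
        deltas = bytearray()
        for q in quantized:
            deltas.append((q - previous) & 0xFF)  # wrap so each delta fits in a byte
            previous = q
        streams.append(bytes(deltas))
    return b"".join(streams)


def decode_positions(blob, count, bbox_min, bbox_max):
    """Undo encode_positions, up to the quantization error."""
    positions = [[0.0, 0.0, 0.0] for _ in range(count)]
    for axis in range(3):
        lo, hi = bbox_min[axis], bbox_max[axis]
        step = (hi - lo) / 255.0
        value = 0
        for i, delta in enumerate(blob[axis * count:(axis + 1) * count]):
            value = (value + delta) & 0xFF
            positions[i][axis] = lo + value * step
    return [tuple(p) for p in positions]


# The compressor only ever sees the reordered delta streams, e.g.:
# packed = zlib.compress(encode_positions(points, bbox_min, bbox_max))
```

Splitting the axes and storing deltas turns smooth geometry into long runs of small, similar byte values, which is the kind of data a general-purpose compressor handles well.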

There are two things I still have to implement, though: automatic UV generation and mesh subdivision (just like Maya's subdivs), for smoother surfaces without having to store all the polys. Both will be done at load time.
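The post doesn't say which automapping projection is planned. As one simple possibility, a spherical projection around the mesh centre can generate UVs at load time from the positions alone. The Python sketch below assumes that choice, and `spherical_uvs` is a hypothetical name. This mapping has a seam where u wraps from 1 back to 0 and pinches at the poles.

```python
import math


def spherical_uvs(vertices):
    """Generate (u, v) in [0, 1] for each vertex by projecting it onto a
    sphere around the mesh's vertex centroid."""
    n = len(vertices)
    cx = sum(v[0] for v in vertices) / n
    cy = sum(v[1] for v in vertices) / n
    cz = sum(v[2] for v in vertices) / n
    uvs = []
    for x, y, z in vertices:
        dx, dy, dz = x - cx, y - cy, z - cz
        r = math.sqrt(dx * dx + dy * dy + dz * dz) or 1.0  # vertex at the centre
        u = 0.5 + math.atan2(dz, dx) / (2.0 * math.pi)  # longitude
        v = 0.5 - math.asin(max(-1.0, min(1.0, dy / r))) / math.pi  # latitude
        uvs.append((u, v))
    return uvs
```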

A little bit more ambient occlusion

Published Monday, July 07, 2008 by Glow.

Here are some screenies of another test scene with ambient occlusion (AO) turned on. AO is precalculated on the GPU, and in this scene it's combined with dynamic lighting.

I had been working on AO a few months ago, but still hadn't taken the time to polish the code and make it actually usable. Now that I've done that, we can use it in our next releases. It already looks quite cool in my test scenes; I'm curious how it will look in larger scenes...
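How the baked AO term is combined with the dynamic lights isn't specified in the post. A common, simple choice is to let AO attenuate only the ambient term and add Lambert diffuse from each dynamic light on top. The Python sketch below assumes that model; `shade` and its parameters are hypothetical. Some engines also multiply the direct light by AO for extra contact darkening.

```python
def shade(albedo, normal, ao, ambient, lights):
    """Shade one surface point.

    albedo, ambient: RGB tuples; normal: unit vector;
    ao: precomputed ambient occlusion in [0, 1] (1 = fully unoccluded);
    lights: list of (unit direction towards the light, RGB intensity).
    """
    # Baked AO darkens the ambient (indirect) contribution only.
    light = [a * ao for a in ambient]
    for direction, intensity in lights:
        # Lambert's cosine term, clamped so lights behind the surface add nothing.
        n_dot_l = max(0.0, sum(n * d for n, d in zip(normal, direction)))
        for k in range(3):
            light[k] += intensity[k] * n_dot_l
    return tuple(albedo[k] * light[k] for k in range(3))
```

Because the AO value is a static per-point input, the lights can move freely every frame without recomputing it.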

Seiryoku is released

Published Thursday, July 03, 2008 by Glow.

Okay, we've done it: we've submitted our entry to the Intel Demo Competition 2008. We actually submitted it Tuesday morning already, but the competition website wasn't active until yesterday.

Anyway, our demo is called Seiryoku, and it's actually the first full-sized demo we've made so far. With a size limit of 64 MB you can simply do a lot more than within the 64 KB restriction we're used to, so right from the start we planned to make this demo different from our 64k intros. For this reason I've been very busy building a custom demo engine called nerveHD. I took quite a few days off from work to code on nerveHD, but it still took a lot more effort than expected.

Our final result for the competition was more or less rushed together, just to have something submitted. At the deadline we still didn't have any overlays or fading done, and we were missing several models. Luckily we were granted some extra time to add at least those basic things and make it all look a bit more decent (thanks tobi!).

But in the end, we're not that proud of this release. It could have been much better if we had simply planned and scheduled things better. We didn't have time to use our dynamic shadows, the dynamic/skinned cables, the post-effects and the morphing objects, for example. On the other hand, it was a really cool project for a really cool competition. We did a few things we hadn't done before, such as using polygonal models (instead of generating them from primitives), prebaked global illumination (instead of a simple realtime model) and manually made textures (instead of generated ones).

Oh, and about the name of the demo: "Seiryoku" is Japanese for "influence; power; might; strength; potency; force; energy", which sounds oriental and also covers our demo quite nicely.

Please check out our entry and all other entries (which are really of top quality) for the Intel Demo Competition online at http://www.intel-demoscene.de. Since this is an online competition, you can also vote for your favourite entry at the same site.

Our entry can also be found at pouet.net (including user comments and download links) and I've also added a small video below:

That's it for now. We've already started work on two new projects for the next party: Evoke 2008, held from August 8 to 10 in Köln, Germany.